Alright, I admit it – I dropped the ball. I’ve been so wrapped up in DOing VR sound design that I’ve totally neglected blogging about it. In honor of that, I thought I’d write about creating sounds tied to in-game physics… like a bouncing ball.

What “touch controllers” mean for sound design

With VR systems like the Oculus and Vive, there are new controllers (one for each hand) that let players interact with objects far more realistically than was previously possible: you can pick things up, drop them, throw them, and so on. Of course, all of these objects will probably need sound, and it’s impossible to predict where or how they’ll collide with things (Will the player drop it straight down? Throw it across the room? Roll it down some stairs?). The most realistic way to design sound for these objects is to tie the sounds to the game’s actual physics.

If the thought of tackling that complexity is getting you down, I’m here to offer you a cure for your depression – a little thing I call “The SSRI.”

Simple System for Realistic Impacts (SSRI)

Categories:

The SSRI breaks an object’s sounds down into four categories: Hard, Medium, Soft, and Stop. The first three correspond to hard, medium, and soft impacts for the object, with Hard the loudest and each subsequent category quieter. The “Stop” category is the final sound an object makes as it comes to rest (often a few quick, subtle impacts and/or a sliding sound, depending on the object).

Variation:

Since an object is likely to get knocked around more than once, we want enough variety that it doesn’t sound the same every time. For that, we do two things:

- Make a handful (6–10) of similar but distinct sounds for each category, and set them up to be selected at random when called.

- Add a little per-instance pitch and volume modulation (±2–5% usually does the trick) for even more variance.

Even at the minimum of 6 sounds per category, that’s 24 raw sounds; combined with the modulation, that should be enough variety that any repeats are unlikely to be noticed.
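To make the variation idea concrete, here is a minimal, engine-agnostic Python sketch of one category’s sound bank. It is not tied to any real audio engine: `SoundBank`, `next_instance`, and the clip names are hypothetical, and the returned pitch and volume values are plain multipliers (1.0 = unchanged) that you would pass to whatever playback call your engine or middleware provides.

```python
import random
from typing import List, Optional, Tuple


class SoundBank:
    """One SSRI category (e.g. "Hard"): a pool of clips plus per-instance modulation.

    Hypothetical helper; clip names are placeholders for your own assets.
    """

    def __init__(
        self,
        clips: List[str],
        pitch_range: float = 0.05,   # +/-5% pitch variation
        volume_range: float = 0.05,  # +/-5% volume variation
        rng: Optional[random.Random] = None,
    ) -> None:
        if not clips:
            raise ValueError("each category needs at least one clip")
        self.clips = list(clips)
        self.pitch_range = pitch_range
        self.volume_range = volume_range
        self.rng = rng if rng is not None else random.Random()

    def next_instance(self) -> Tuple[str, float, float]:
        """Pick a random clip plus random pitch/volume multipliers around 1.0."""
        clip = self.rng.choice(self.clips)
        pitch = 1.0 + self.rng.uniform(-self.pitch_range, self.pitch_range)
        volume = 1.0 + self.rng.uniform(-self.volume_range, self.volume_range)
        return clip, pitch, volume


# Usage: six hypothetical "Hard" variations for a ball.
hard = SoundBank([f"ball_hard_{i:02d}.wav" for i in range(1, 7)])
clip, pitch, volume = hard.next_instance()
print(f"play {clip} at pitch x{pitch:.3f}, volume x{volume:.3f}")
```

You would build one bank per category (Hard, Medium, Soft, Stop) for each object. Passing in a seeded `random.Random` is only there to make the behavior reproducible when testing.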

Physics:

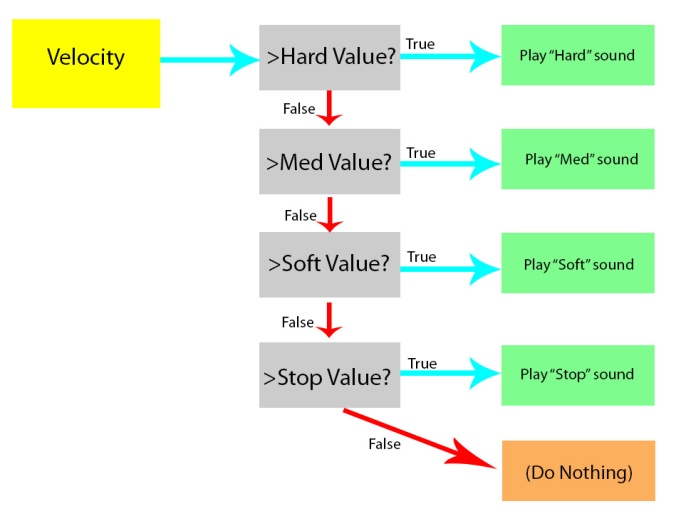

Now we have to do a bit of programming to tell the game how to use these sounds. Whenever we get a hit event for the object colliding with something, we need to play one of these sounds. Which one depends on how hard the object hits, which we can determine from its velocity. Once we know that, our logic will look something like this:

On impact, we check whether the velocity is greater than our lower threshold for playing the “Hard” sound. If it isn’t, we check whether it’s greater than our threshold for “Medium,” and so on down the list. The first check that comes back true fires the corresponding sound.
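The threshold cascade can be sketched in a few lines of Python. This is an engine-agnostic illustration, not the implementation shown in the image: the threshold numbers are placeholders to be tuned, `pick_category` and `impact_speed` are hypothetical names, and it assumes your engine’s hit event gives you a velocity vector (the object’s velocity, or the relative velocity at the contact if your engine reports that). `math.hypot` with more than two arguments requires Python 3.8 or later.

```python
import math
from typing import Optional, Sequence

# Placeholder lower thresholds in m/s, highest first. Tune these per object
# (mass and other physics settings change what counts as a "hard" hit).
THRESHOLDS = [
    ("Hard", 4.0),
    ("Medium", 2.0),
    ("Soft", 0.75),
    ("Stop", 0.1),  # keep this a bit above 0 so near-resting contacts stay silent
]


def impact_speed(velocity: Sequence[float]) -> float:
    """Magnitude of a velocity vector, e.g. (x, y, z) from the hit event."""
    return math.hypot(*velocity)


def pick_category(speed: float) -> Optional[str]:
    """Return the first category whose lower threshold the speed exceeds.

    Returns None when the impact is too gentle to play anything.
    """
    for category, lower_threshold in THRESHOLDS:
        if speed > lower_threshold:
            return category
    return None


# Usage with a made-up hit velocity of (3, 4, 0) m/s: speed 5.0, so "Hard".
print(pick_category(impact_speed((3.0, 4.0, 0.0))))
```

Keeping the thresholds in an ordered list, highest first, means the first match wins, just like the chain of checks described above, and it lets you tune values per object type without touching the logic itself.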

A few notes:

– Finding the best threshold values may take some trial and error, depending on settings (e.g., the object’s assigned mass), but so far I’ve found that once you have them, they tend to work pretty well for similar objects.

– It’s important to make sure the threshold for “Stop” is a bit greater than 0. If it’s too low, an object settling against a surface can register a bunch of tiny collisions, each of which fires a sound, and the result is a lot of noise.

– It may be worth adding a short delay before these sounds can re-fire (something in the range of 0.1 to 0.2 seconds seems to work pretty well for me). That way, if several collisions happen in very quick succession, you don’t get a weird, glitchy, machine-gun rapid fire of sounds.

– When I originally built this, I considered also modulating the volume based on velocity. Once I got it built this far, though, it worked so well that doing so didn’t seem necessary, so I opted to keep it simple. Your mileage may vary.
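The re-fire delay from the notes above can be implemented as a small per-object gate. This is again a hypothetical Python sketch (`ImpactSoundGate` and `try_fire` are made-up names, not part of any engine API); the clock is injectable so the behavior is easy to test, and it defaults to `time.monotonic`.

```python
import time
from typing import Callable, Optional


class ImpactSoundGate:
    """Per-object re-trigger limiter: at most one impact sound per cooldown window."""

    def __init__(
        self,
        cooldown: float = 0.15,  # seconds; 0.1-0.2 s tends to work per the note above
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.cooldown = cooldown
        self.clock = clock
        self._last_fired: Optional[float] = None

    def try_fire(self) -> bool:
        """Return True (and start a new window) if a sound may play now."""
        now = self.clock()
        if self._last_fired is not None and now - self._last_fired < self.cooldown:
            return False  # too soon after the previous sound; skip this hit
        self._last_fired = now
        return True


# Usage inside a (hypothetical) collision callback:
gate = ImpactSoundGate()
if gate.try_fire():
    print("play the impact sound")
```

Rejected hits do not restart the timer, so a steady stream of collisions still produces at most one sound per cooldown window. That is one reason the nonzero “Stop” threshold still matters: the gate limits how often sounds fire, while the threshold keeps the tiniest contacts from firing at all.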

Wrapping up

So that’s it. Pretty simple, right? I hope The SSRI helps some of you out there in your sound design quests. In the next post, I’ll talk more about touch controllers and the unique sound design situations they create.